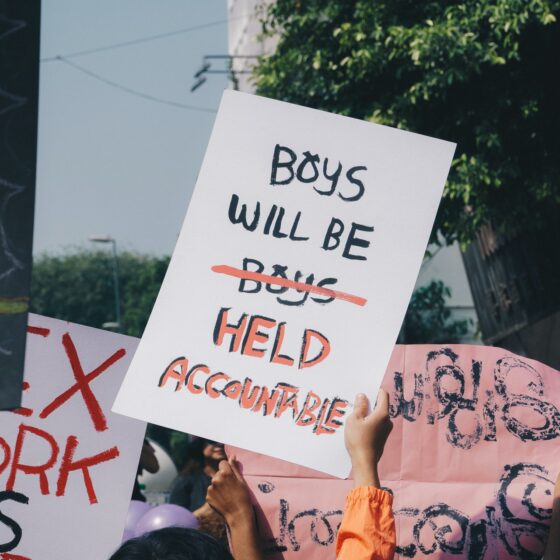

#MeToo broke the silence around sexual assault and harassment, giving survivors space online to reclaim their stories. Four years after the wave of Weinstein allegations that reinvigorated the words of Tarana Burke’s 2006 movement, we are seeing a shift in who speaks out, the beginning of legislative change, and crucial cultural unlearning.

Many of us now talk openly about boundaries, sexual harassment in the workplace, reporting assault, and the power structures that underpin the gender and racial pay gaps. #MeToo makes the scale of sexual assault and abuse of power impossible to ignore, with the issue glaring right in front of us.

The same cannot be said of deepfakes. In the same period in which we have watched the #MeToo movement gain momentum online, the methods of digital violence have been rapidly evolving. The difficulty with this form of image-based abuse is that it happens out of frame, often without our knowledge, and technological advances are challenging our brains’ ability to distinguish between what is real and what is ‘fake’.

Back in November 2017, the word ‘deepfake’ emerged from a Reddit user who started a forum devoted to the growing trend of images being digitally transposed onto separate, secondary material. The portmanteau combines the ‘deep learning’ used by AI with the ‘fake’ final image. Today, deepfakes are part of the ecosystem of our online communication. We’ve grown accustomed to seeing public figures’ speeches reproduced and ventriloquised in memes and clips on social media platforms. In 2019, an altered video of Democratic U.S. House Speaker Nancy Pelosi went viral. The Speaker’s words were slowed down to give the impression of incompetence or cognitive confusion. Upon seeing the so-called ‘cheap-fake’, in which media is edited using conventional technology rather than AI, former U.S. President Donald Trump retweeted the video, actively spreading the disinformation that ‘Nervous Nancy’ Pelosi was not mentally fit to fulfill her role.

Over the last year, the use of deepfakes has increased at a staggering pace. Just last week, AI firm Sensity released a report revealing that 85,047 deepfake videos had been detected as of December 2020, with the production rate doubling every two months. And, truthfully, we shouldn’t be surprised by these statistics. In fact, some of us have probably used the same algorithms in the recent face-swapping trend, in which apps let you insert a picture of yourself into a famous movie scene.

Scrolling on your phone, you may flick past a deepfake video, but we all need to pay more attention to how the same algorithms can be used to spread disinformation and to circulate personal images without consent.

We know that online abuse disproportionately affects women. U.K. charity Glitch reports that women are 27 times more likely to be harassed online, and according to a UN report on cyber violence, 73 percent of women have experienced some form of online violence. Non-consensual deepfake porn is invasive, harmful and humiliating, and yet in the U.K. there is no justice for this profound violation.

It has become easy for anyone to make a deepfake and to inflict digital sexual violence. In 2019, Sensity found that 96 percent of deepfakes were pornographic and were used to target women and girls, who are overwhelmingly the most vulnerable to this digital violence. Apps such as DeepNude, which launched in 2019, could be downloaded for free and required just a single uploaded photo to generate a graphic deepfake. Although the company was forced offline following media exposure, the app’s software was sold on and continues to produce deepfakes on other platforms.

Tech companies have made moves to tackle deepfake disinformation and digital violence, with Twitter introducing a ban and Microsoft developing tools that can detect deepfakes. However, given the rate at which deepfakes are evolving, tech companies have a responsibility to act now to protect their users from the dangers that the technology’s further development may bring.

Similar action needs to be taken across all pornography platforms. Pornhub recently came under fire in December for platforming non-consensual pornography. Following The New York Times’ investigation, Pornhub denied the claims, removed uploads from unverified accounts and then released a statement praising its leading safeguarding techniques.

Despite the company’s claims of advanced safeguarding, more needs to be done to protect victims of deepfakes. Even though the company banned deepfakes in 2018, Sensity researcher Henry Ajder told Rolling Stone that the ban was largely ineffective, as it only blocked the label ‘deepfake’, meaning the content could still be uploaded under another name. Delayed action and inconsistent policy do not adequately protect those who fall victim to deepfakes and non-consensual pornography.

We have seen that so much of the media focus on #MeToo has centred on Hollywood and powerful perpetrators. In the case of deepfakes, however, money and status offer no protection. Some of the earliest non-consensual pornographic deepfakes targeted celebrities, including actress and activist Emma Watson, Game of Thrones actress Maisie Williams and Wonder Woman star Gal Gadot. Speaking to the Washington Post back in 2018, actress Scarlett Johansson described how a deepfake pornographic video of her had been viewed more than 1.5 million times and was presented as authentic, ‘leaked’ footage. Johansson exhausted her legal options to no avail, as she simply could not stop the images from being reproduced and shared in other countries.

We need to make non-consensual deepfake porn illegal. In the U.K., we are not protected from deepfakes in the way we are from revenge porn, because the sexual image is not an original but one that has been merged with another. Although deepfakes are banned in some U.S. states and parts of Europe (in Ireland and Belgium, perpetrators can face prison sentences and fines), inconsistencies in the law mean that a fake image of you could still exist online in another country with more lenient restrictions.

In addition to the individual trauma this digital sexual violence inflicts, deepfakes pose a direct threat to the #MeToo movement’s efforts to hold perpetrators accountable. As Nina Schick writes in her 2020 book Deepfakes: The Coming Infocalypse, we saw how disinformation played a role in Trump’s ‘pussygate’ scandal, when he was able to dismiss the Access Hollywood recording as ‘fake’. Schick highlights that as deepfakes become more widespread, if “anyone can be faked”, then “everyone has plausible deniability.”

Now, in the U.K., academic and poet Helen Mort is campaigning for the Law Commission to “tighten regulation on taking, making, and faking explicit images”. Helen shared how she discovered that photos from her private social media account were being used to make graphic deepfake porn. In November, she found out that this had been happening since 2017. The images included photos from when she was pregnant with her son and pictures downloaded from an old Facebook account from when she was 19, none of which contained nudity. On porn websites, the deepfake content even included her name, and the user who posted it was actively encouraging others to further distort her images by transposing her face onto other graphic and violent content. When she contacted the police, Helen was told there was nothing she could do. So Helen started a campaign to make this intimate image abuse illegal. Since its launch, the campaign has already received cross-party support for making non-consensual deepfake porn illegal, and Helen feels empowered by speaking up about this clear injustice.

Rather powerfully, she encouraged others to seek out her work and the stories of other victims of non-consensual deepfake porn, and to listen to the people behind the images. “I’m not the image on those websites,” Helen said. “I have my own voice.”

We all have our own voices, and when it comes to taking back autonomy over our bodies and our safety, it’s high time we used them.

You can sign the petition here: https://www.change.org/p/the-law-comission-tighten-regulation-on-taking-making-and-faking-explicit-images